Papers from CAL

Topics

Hardware Security

ReCon: Efficient Detection, Management, and Use of Non-Speculative Information Leakage

P. Aimoniotis, A. Kvalsvik, M. Själander, and S. Kaxiras

Proceedings of the ACM/IEEE International Symposium on Microarchitecture (MICRO), 2023

DOI:

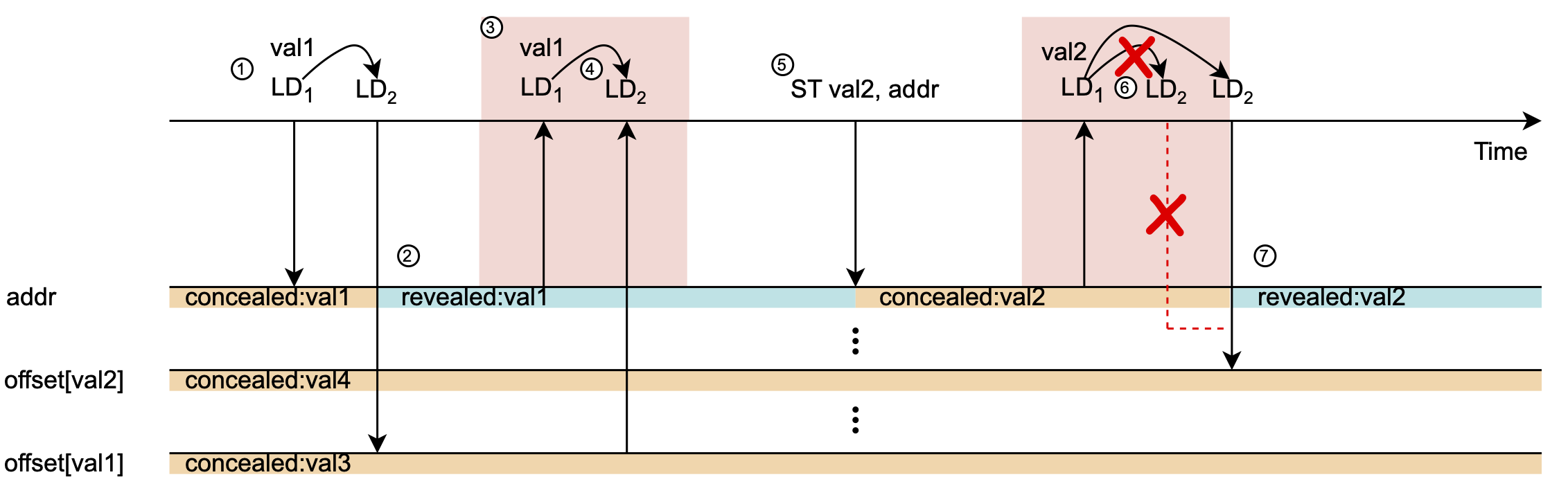

Abstract: In a speculative side-channel attack, a secret is improperly accessed and then leaked by passing it to a transmitter instruction. Several proposed defenses effectively close this security hole by either delaying the secret from being loaded or propagated, or by delaying dependent transmitters (e.g., loads) from executing when fed with tainted input derived from an earlier speculative load. This results in a loss of memory-level parallelism and performance.

A security definition proposed recently, in which data already leaked in non-speculative execution need not be considered secret during speculative execution, can provide a solution to the loss of performance. However, detecting and tracking non-speculative leakage carries its own cost, increasing complexity. The key insight that enables us to exploit non-speculative leakage as an optimization for other secure speculation schemes is that the majority of non-speculative leakage is simply due to pointer dereferencing (or base-address indexing), which is essentially what many secure speculation schemes prevent from taking place speculatively.

We present ReCon, which: i) efficiently detects non-speculative leakage by limiting detection to pairs of directly-dependent loads that dereference pointers (or index a base address); and ii) piggybacks non-speculative leakage information on the coherence protocol. In ReCon, the coherence protocol remembers and propagates the knowledge of what has leaked and therefore what is safe to dereference under speculation. To demonstrate the effectiveness of ReCon, we show how two state-of-the-art secure speculation schemes, Non-speculative Data Access (NDA) and Speculative Taint Tracking (STT), leverage this information to enable more memory-level parallelism in both single-core and multicore scenarios: NDA with ReCon reduces the performance loss by 28.7% for SPEC2017, 31.5% for SPEC2006, and 46.7% for PARSEC; STT with ReCon reduces the loss by 45.1%, 39%, and 78.6%, respectively.
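The bookkeeping that ReCon relies on can be illustrated with a toy software model: when a committed (non-speculative) load's value is used as the address of a directly dependent load, that value is treated as leaked, so a later speculative dereference of the same location need not be delayed. This is a hypothetical, word-granular sketch; the class and method names are ours, and clearing the mark on a store is our own assumption. In ReCon itself this information travels with the coherence protocol, which the sketch does not model.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class LeakTracker:
    """Toy model of ReCon-style leakage bookkeeping (hypothetical, word-granular)."""

    # Addresses whose current value has already leaked non-speculatively
    # because a directly dependent load used that value as an address.
    revealed: set[int] = field(default_factory=set)

    def commit_pointer_chase(self, producer_addr: int) -> None:
        """A committed load read memory[producer_addr] and a directly dependent,
        committed load dereferenced that value: the value is now public."""
        self.revealed.add(producer_addr)

    def on_store(self, addr: int) -> None:
        """Assumption: a store changes the value, so the earlier leak no longer covers it."""
        self.revealed.discard(addr)

    def may_issue_dependent(self, producer_addr: int, speculative: bool) -> bool:
        """A delay-based scheme (NDA/STT-like) holds back a speculative load whose
        address came from memory[producer_addr] unless that value already leaked."""
        return (not speculative) or producer_addr in self.revealed
```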

Doppelganger Loads: A Safe, Complexity-Effective Optimization for Secure Speculation Schemes

A. Kvalsvik, P. Aimoniotis, S. Kaxiras, and M. Själander

Proceedings of the ACM/IEEE International Symposium on Computer Architecture (ISCA), 2023

DOI: https://doi.org/10.1145/3579371.3589088

Abstract: Speculative side-channel attacks have forced computer architects to rethink speculative execution. Effectively preventing microarchitectural state from leaking sensitive information will be a key requirement in future processor design.

An important limitation of many secure speculation schemes is a reduction in the available memory parallelism, because loads deemed unsafe (the definition depends on the particular scheme) are blocked, as they might leak information. Our contribution is to show that it is possible to recover some of this lost memory parallelism, by safely predicting the addresses of these loads in a threat-model transparent way, i.e., without worsening the security guarantees of the underlying secure scheme. To demonstrate the generality of the approach, we apply it to three different secure speculation schemes: Non-speculative Data Access (NDA), Speculative Taint Tracking (STT), and Delay-on-Miss (DoM).

An address predictor is trained on non-speculative data and can afterwards predict the addresses of unsafe, slow-to-issue loads, preloading the target registers with speculative values that, on correct predictions, can be released sooner than if the entire load process had to be started. This new perspective on speculative execution encompasses all loads and gives speedups that are separate from those of prefetching.
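A per-PC stride predictor, the kind of existing structure the abstract says Doppelganger Loads can reuse, can be sketched as follows. It is trained only on committed (non-speculative) load addresses and returns a predicted address once its confidence reaches a threshold; a Doppelganger load would be issued to that address, and its value released only if the real address, once the load is deemed safe, matches the prediction. The table organization, confidence threshold, `distance` parameter, and all names are assumptions, not the paper's design.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class StrideEntry:
    last_addr: int
    stride: int = 0
    confidence: int = 0


class StrideAddressPredictor:
    """Per-PC stride predictor trained only on non-speculative (committed) load
    addresses. Hypothetical sketch: thresholds and table organization are assumed."""

    def __init__(self, threshold: int = 2, max_conf: int = 3) -> None:
        self.table: dict[int, StrideEntry] = {}
        self.threshold = threshold
        self.max_conf = max_conf

    def train(self, pc: int, addr: int) -> None:
        """Update the entry for `pc` with the address of a committed load."""
        entry = self.table.get(pc)
        if entry is None:
            self.table[pc] = StrideEntry(last_addr=addr)
            return
        stride = addr - entry.last_addr
        if stride == entry.stride:
            entry.confidence = min(entry.confidence + 1, self.max_conf)
        else:
            entry.stride, entry.confidence = stride, 0
        entry.last_addr = addr

    def predict(self, pc: int, distance: int = 1) -> Optional[int]:
        """Address `distance` instances ahead, or None if confidence is too low."""
        entry = self.table.get(pc)
        if entry is None or entry.confidence < self.threshold:
            return None
        return entry.last_addr + distance * entry.stride


def release_if_correct(predicted: Optional[int], actual: int, preloaded: int) -> Optional[int]:
    """Once the real address is known and the load is safe, a matching prediction
    lets the preloaded value be used; otherwise the load issues normally (None)."""
    return preloaded if predicted == actual else None
```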

We call the address-predicted counterparts of loads Doppelganger Loads. They give notable performance improvements for the three secure speculation schemes we evaluate, NDA, STT, and DoM. The Doppelganger Loads reduce the geometric mean slowdown by 42%, 48%, and 30% respectively, as compared to an unsafe baseline, for a wide variety of SPEC2006 and SPEC2017 benchmarks. Furthermore, Doppelganger Loads can be efficiently implemented with only minor core modifications, reusing existing resources such as a stride prefetcher, and most importantly, requiring no changes to the memory hierarchy outside the core.


Understanding Selective Delay as a Method for Efficient Secure Speculative Execution

C. Sakalis, S. Kaxiras, A. Ros, A. Jimborean, and M. Själander

IEEE Transactions on Computers (TC), 2020

DOI: https://doi.org/10.1109/TC.2020.3014456

Abstract: Since the introduction of Meltdown and Spectre, the research community has been tirelessly working on speculative side-channel attacks and on how to shield computer systems from them. To ensure that a system is protected not only from all the currently known attacks but also from future, yet-to-be-discovered attacks, the solutions developed need to be general in nature, covering a wide array of system components, while at the same time keeping the performance, energy, area, and implementation complexity costs at a minimum. One such solution is our own delay-on-miss, which efficiently protects the memory hierarchy by i) selectively delaying speculative load instructions and ii) utilizing value prediction as an invisible form of speculation. In this article we dive deeper into delay-on-miss, offering insights into why and how it affects the performance of the system. We also reevaluate value prediction as an invisible form of speculation. Specifically, we focus on the implications that delaying memory loads has on the memory-level parallelism of the system and how this affects the value predictor and the overall performance of the system. We present new, updated results, but, more importantly, we also offer deeper insight into why delay-on-miss works so well and what this means for the future of secure speculative execution.

Efficient Invisible Speculative Execution through Selective Delay and Value Prediction

C. Sakalis, S. Kaxiras, A. Ros, A. Jimborean, and M. Själander

Proceedings of the ACM/IEEE International Symposium on Computer Architecture (ISCA), 2019

DOI: https://doi.org/10.1145/3307650.3322216

Abstract: Speculative execution, the foundation on which modern high-performance general-purpose CPUs are built, has recently been shown to enable a slew of security attacks. All these attacks are centered around a common set of behaviors: during speculative execution, the architectural state of the system is kept unmodified until the speculation can be verified. If a misspeculation occurs, anything that can affect the architectural state is reverted (squashed) and re-executed correctly. However, the same is not true for the microarchitectural state. Normally invisible to the user, changes to the microarchitectural state can be observed through various side-channels, with timing differences caused by the memory hierarchy being one of the most common and easiest to exploit. The speculative side-channels can then be exploited to perform attacks that bypass software and hardware checks in order to leak information. These attacks, of which the most infamous are perhaps Spectre and Meltdown, have led to a frantic search for solutions.

In this work, we present our own solution for reducing the microarchitectural state changes caused by speculative execution in the memory hierarchy. It is based on the observation that if we only allow accesses that hit in the L1 data cache to proceed, then we can easily hide any microarchitectural changes until after the speculation has been verified. At the same time, we propose to prevent stalls by value-predicting the loads that miss in the L1. Value prediction, though speculative, constitutes an invisible form of speculation, not seen outside the core. We evaluate our solution and show that we can prevent observable microarchitectural changes in the memory hierarchy while keeping the performance and energy costs at 11% and 7%, respectively. In comparison, the current state-of-the-art solution, InvisiSpec, incurs a 46% performance loss and a 51% energy increase.
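The per-load decision at the core of delay-on-miss can be written as a small function: a load that is no longer speculative, or a speculative load that hits in the L1 data cache, proceeds; a speculative L1 miss is held back until the speculation resolves, and a confident value prediction can feed dependent instructions in the meantime. The enum, the function name, and the `vp_confident` input are hypothetical; the sketch ignores secondary effects such as replacement-state updates and does not model the later validation of predicted values.

```python
from enum import Enum


class Action(Enum):
    ISSUE = "issue"                  # access the memory hierarchy now
    DELAY = "delay"                  # hold the load until it is non-speculative
    DELAY_WITH_VP = "delay+predict"  # hold the load, feed dependents a predicted value


def delay_on_miss(speculative: bool, l1_hit: bool, vp_confident: bool) -> Action:
    """Hypothetical issue policy for one load under delay-on-miss.

    Non-speculative loads and speculative L1 hits proceed; a speculative L1 miss
    is not sent further down the hierarchy until the speculation resolves.
    A predicted value must still be checked against the real load result later
    (not modeled here)."""
    if not speculative or l1_hit:
        return Action.ISSUE
    return Action.DELAY_WITH_VP if vp_confident else Action.DELAY
```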

Ghost loads: what is the cost of invisible speculation?

C. Sakalis, M. Alipour, A. Ros, A. Jimborean, S. Kaxiras, and M. Själander

Proceedings of the ACM International Conference on Computing Frontiers (CF), 2019

DOI: https://doi.org/10.1145/3310273.3321558

Abstract: Speculative execution is necessary for achieving high performance on modern general-purpose CPUs but, starting with Spectre and Meltdown, it has also been proven to cause severe security flaws. In case of a misspeculation, the architectural state is restored to ensure functional correctness, but a multitude of microarchitectural changes (e.g., cache updates), caused by the speculatively executed instructions, are commonly left in the system. These changes can be used to leak sensitive information, which has led to a frantic search for solutions that can eliminate such security flaws. The contribution of this work is an evaluation of the cost of hiding speculative side-effects in the cache hierarchy, making them visible only after the speculation has been resolved. For this, we compare (for the first time) two broad approaches: i) waiting for loads to become non-speculative before issuing them to the memory system, and ii) eliminating the side-effects of speculation, a solution consisting of invisible loads (Ghost loads) and performance optimizations (Ghost Buffer and Materialization). While previous work, InvisiSpec, has proposed a similar solution to our latter approach, it has done so with only a minimal evaluation and at a significant performance cost. The detailed evaluation of our solutions shows that: i) waiting for loads to become non-speculative is no more costly than the previously proposed InvisiSpec solution, albeit much simpler, non-invasive in the memory system, and stronger security-wise; ii) hiding speculation with Ghost loads (in the context of a relaxed memory model) can be achieved at the cost of 12% performance degradation and 9% energy increase, which is significantly better than the previous state-of-the-art solution.

Performance Analysis

TIP: Time-Proportional Instruction Profiling

Björn Gottschall, Lieven Eeckhout, and Magnus Jahre

Proceedings of the ACM/IEEE International Symposium on Microarchitecture (MICRO), 2021

DOI: https://doi.org/10.1145/3466752.3480058

Abstract: A fundamental part of developing software is to understand what the application spends time on. This is typically determined using a performance profiler, which essentially captures how execution time is distributed across the instructions of a program. At the same time, the highly parallel execution model of modern high-performance processors means that it is difficult to reliably attribute time to instructions — resulting in performance analysis being unnecessarily challenging.

In this work, we first propose the Oracle profiler, which is a golden reference for performance profilers. Oracle is golden because (i) it accounts for every clock cycle and every dynamic instruction, and (ii) it is time-proportional, i.e., it attributes each clock cycle to the instruction(s) whose latency the processor exposes. We use Oracle to, for the first time, quantify the error of software-level profiling, the dispatch-tagging heuristic used in AMD IBS and Arm SPE, the Last-Committing Instruction (LCI) heuristic used in external monitors, and the Next-Committing Instruction (NCI) heuristic used in Intel PEBS, resulting in average instruction-level profile errors of 61.8%, 53.1%, 55.4%, and 9.3%, respectively. The reason for these errors is that all existing profilers have cases in which they systematically attribute execution time to instructions that are not the root cause of performance loss. To overcome this issue, we propose Time-Proportional Instruction Profiling (TIP), which combines Oracle's time attribution policies with statistical sampling to enable practical implementation. We implement TIP within the Berkeley Out-of-Order Machine (BOOM) and find that TIP is highly accurate. More specifically, TIP's instruction-level profile error is only 1.6% on average (maximally 5.0%) versus 9.3% on average (maximally 21.0%) for state-of-the-art NCI. TIP's improved accuracy matters in practice, as we exemplify by using TIP to identify a performance problem in the SPEC CPU2017 benchmark Imagick that, once addressed, improves performance by 1.93×.
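A time-proportional cycle-attribution policy can be sketched over a per-cycle trace of commit-stage state. The simplified rules below are our reading of the idea, not the paper's exact policy: a cycle in which instructions commit is split equally among them, a stalled cycle goes to the instruction at the head of the reorder buffer (ROB), and a cycle with an empty ROB goes to an instruction the caller designates (for example, the next instruction to commit after a front-end stall). TIP samples such states statistically rather than recording every cycle; `CycleState` and `time_proportional_profile` are hypothetical names.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class CycleState:
    committed: Sequence[str]            # instructions that commit this cycle
    rob_head: Optional[str]             # oldest in-flight instruction; None if ROB empty
    empty_blame: Optional[str] = None   # who to charge if the ROB is empty (caller decides)


def time_proportional_profile(trace: Sequence[CycleState]) -> dict[str, float]:
    """Charge every cycle to the instruction(s) whose latency it exposes (simplified)."""
    profile: dict[str, float] = defaultdict(float)
    for cycle in trace:
        if cycle.committed:
            # Computing: split the cycle equally among the committing instructions.
            share = 1.0 / len(cycle.committed)
            for insn in cycle.committed:
                profile[insn] += share
        elif cycle.rob_head is not None:
            # Stalled: the instruction blocking commit gets the whole cycle.
            profile[cycle.rob_head] += 1.0
        elif cycle.empty_blame is not None:
            # Empty ROB (front-end stall or flush): charge the designated instruction.
            profile[cycle.empty_blame] += 1.0
    return dict(profile)
```

Because every cycle lands on exactly one bucket (or is split across committing instructions), the profile sums to the number of cycles in the trace whenever each empty-ROB cycle has a designated instruction.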

TEA: Time-Proportional Event Analysis

Björn Gottschall, Lieven Eeckhout, and Magnus Jahre

Proceedings of the ACM/IEEE International Symposium on Computer Architecture (ISCA), 2023

DOI: https://doi.org/10.1145/3579371.3589058

Abstract: As computer architectures become increasingly complex and heterogeneous, it becomes progressively more difficult to write applications that make good use of hardware resources. Performance analysis tools are hence critically important as they are the only way through which developers can gain insight into the reasons why their application performs as it does. State-of-the-art performance analysis tools capture a plethora of performance events and are practically non-intrusive, but performance optimization is still extremely challenging. We believe that the fundamental reason is that current state-of-the-art tools in general cannot explain why executing the application's performance-critical instructions takes time.

We hence propose Time-Proportional Event Analysis (TEA) which explains why the architecture spends time executing the application's performance-critical instructions by creating time-proportional Per-Instruction Cycle Stacks (PICS). PICS unify performance profiling and performance event analysis, and thereby (i) report the contribution of each static instruction to overall execution time, and (ii) break down per-instruction execution time across the (combinations of) performance events that a static instruction was subjected to across its dynamic executions. Creating time-proportional PICS requires tracking performance events across all in-flight instructions, but TEA only increases per-core power consumption by ~3.2 mW (~0.1%) because we carefully select events to balance insight and overhead. TEA leverages statistical sampling to keep performance overhead at 1.1% on average while incurring an average error of 2.1% compared to a non-sampling golden reference; a significant improvement upon the 55.6%, 55.5%, and 56.0% average error for AMD IBS, Arm SPE, and IBM RIS. We demonstrate that TEA's accuracy matters by using TEA to identify performance issues in the SPEC CPU2017 benchmarks lbm and nab that, once addressed, yield speedups of 1.28× and 2.45×, respectively.
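A per-instruction cycle stack (PICS) can be represented as a map from each static instruction to the cycles it was charged, broken down by the combination of performance events its dynamic instances were subjected to. The sketch assumes each sample already carries a time-proportional cycle count; the function names and the event names used in the test are hypothetical, and the sampling and hardware event tracking that TEA performs are not modeled.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Set, Tuple

Sample = Tuple[str, Set[str], float]  # (static instruction, events seen, cycles charged)


def build_pics(samples: Iterable[Sample]) -> Dict[str, Counter]:
    """Per-instruction cycle stacks: instruction -> {event combination -> cycles}."""
    pics: Dict[str, Counter] = defaultdict(Counter)
    for insn, events, cycles in samples:
        # frozenset makes the event combination usable as a dictionary key
        pics[insn][frozenset(events)] += cycles
    return pics


def total_cycles(stack: Counter) -> float:
    """Overall time of one instruction: the height of its cycle stack."""
    return sum(stack.values())
```

Keying on the whole event combination, rather than on each event separately, keeps overlapping events (for example, a cache miss that also misses in the TLB) from being double-counted.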

AI at the Edge

BISDU: A Bit-Serial Dot-Product Unit for Microcontrollers

D. Metz, V. Kumar, and M. Själander

ACM Transactions on Embedded Computing Systems, 2023

DOI: https://doi.org/10.1145/3608447

Abstract: Low-precision quantized neural networks (QNNs) reduce the required memory space, bandwidth, and computational power, and hence are suitable for deployment in applications such as IoT edge devices. Mixed-precision QNNs, where weights commonly have lower precision than activations or different precision is used for different layers, can limit the accuracy loss caused by low-bit quantization, while still benefiting from reduced memory footprint and faster execution. Previous multiple-precision functional units supporting 8-bit, 4-bit, and 2-bit SIMD instructions have limitations, such as large area overhead, under-utilization of multipliers, and wasted memory space for low and mixed bit-width operations.

This paper introduces BISDU, a bit-serial dot-product unit to support and accelerate execution of mixed-precision low-bit QNNs on resource-constrained microcontrollers. BISDU is a multiplier-less dot-product unit, with frugal hardware requirements (a population count unit and 2:1 multiplexers). The proposed bit-serial dot-product unit leverages the conventional logical operations of a microcontroller to perform multiplications, which enables efficient software implementations of binary (Xnor), ternary (Xor), and mixed-precision [W × A] (And) dot-product operations.
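The multiplier-less arithmetic behind a bit-serial dot product can be illustrated in plain software: packing one bit position of every element into a single word turns a dot product into AND (or XNOR) operations, population counts, and shifts. The sketch below covers unsigned mixed-precision operands and ±1 binary vectors; it is a hypothetical illustration of the arithmetic, not BISDU's instruction set, and the ternary (XOR) variant is omitted.

```python
def popcount(x: int) -> int:
    """Number of set bits in a non-negative integer."""
    return bin(x).count("1")


def pack_bitplane(values, bit):
    """Pack bit `bit` of every element into one word (element k -> bit position k)."""
    word = 0
    for k, v in enumerate(values):
        word |= ((v >> bit) & 1) << k
    return word


def bitserial_dot(weights, acts, w_bits, a_bits):
    """Unsigned mixed-precision [W x A] dot product using only AND, popcount and shifts."""
    assert len(weights) == len(acts)
    w_planes = [pack_bitplane(weights, i) for i in range(w_bits)]
    a_planes = [pack_bitplane(acts, j) for j in range(a_bits)]
    total = 0
    for i, wp in enumerate(w_planes):
        for j, ap in enumerate(a_planes):
            # each pair of bit-planes contributes popcount(AND) weighted by 2^(i+j)
            total += popcount(wp & ap) << (i + j)
    return total


def binary_dot(w_word, a_word, n):
    """Dot product of two length-n {-1,+1} vectors packed as bits (1 -> +1, 0 -> -1)."""
    mask = (1 << n) - 1
    matches = popcount(~(w_word ^ a_word) & mask)  # XNOR marks positions that agree
    return 2 * matches - n
```

Lowering the weight precision from, say, 4 to 2 bits halves the number of bit-plane pairs processed, which is how bit-serial execution trades accuracy against execution time.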

The experimental results show that BISDU achieves competitive performance compared to two state-of-the-art units, XpulpNN and Dustin, when executing low-bit-width CNNs. We demonstrate the advantage that bit-serial execution provides by enabling trading accuracy against weight footprint and execution time. BISDU increases the area of the ALU by 68% and the ALU power consumption by 42% compared to a baseline 32-bit RISC-V (RV32IC) microcontroller core. In comparison, XpulpNN and Dustin increase the area by 6.9× and 11.1× and the power consumption by 3.8× and 5.97×, respectively. The bit-serial state-of-the-art, based on a conventional popcount instruction, increases the area by 42% and power by 32%, with BISDU providing a 37% speedup over it.

BISMO: A Scalable Bit-Serial Matrix Multiplication Overlay for Reconfigurable Computing

Y. Umuroglu, L. Rasnayake, and M. Själander

Proceedings of the IEEE International Conference on Field-Programmable Logic and Applications (FPL), 2018

DOI: https://doi.org/10.1109/FPL.2018.00059

Abstract: Matrix-matrix multiplication is a key computational kernel for numerous applications in science and engineering, with ample parallelism and data locality that lend themselves well to high-performance implementations. Many matrix multiplication-dependent applications can use reduced-precision integer or fixed-point representations to increase their performance and energy efficiency while still offering adequate quality of results. However, precision requirements may vary between different application phases or depend on input data, rendering constant-precision solutions ineffective. We present BISMO, a vectorized bit-serial matrix multiplication overlay for reconfigurable computing. BISMO utilizes the excellent binary-operation performance of FPGAs to offer a matrix multiplication performance that scales with required precision and parallelism. We characterize the resource usage and performance of BISMO across a range of parameters to build a hardware cost model, and demonstrate a peak performance of 6.5 TOPS on the Xilinx PYNQ-Z1 board.
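The reason bit-serial performance scales with precision can be summarized with the standard bit-plane decomposition below. The notation ($w_A$, $w_B$, $A^{(i)}$, $B^{(j)}$) is ours, and the derivation covers unsigned operands: an $M \times K$ matrix $A$ with $w_A$-bit entries and a $K \times N$ matrix $B$ with $w_B$-bit entries are split into binary matrices, and each binary product reduces to AND and popcount.

```latex
A = \sum_{i=0}^{w_A-1} 2^{i} A^{(i)}, \qquad
B = \sum_{j=0}^{w_B-1} 2^{j} B^{(j)}, \qquad
A^{(i)} \in \{0,1\}^{M \times K},\; B^{(j)} \in \{0,1\}^{K \times N}

AB = \sum_{i=0}^{w_A-1} \sum_{j=0}^{w_B-1} 2^{i+j}\, A^{(i)} B^{(j)}, \qquad
\bigl(A^{(i)} B^{(j)}\bigr)_{mn} = \operatorname{popcount}\bigl(\mathbf{a}^{(i)}_{m} \wedge \mathbf{b}^{(j)}_{n}\bigr)
```

Here $\mathbf{a}^{(i)}_{m}$ is row $m$ of $A^{(i)}$ and $\mathbf{b}^{(j)}_{n}$ is column $n$ of $B^{(j)}$, each viewed as a bit vector. For two's-complement operands the same expansion holds if the most significant plane is weighted by $-2^{w-1}$ instead of $2^{w-1}$. The work grows with the $w_A \cdot w_B$ binary products, so halving either precision roughly halves the number of AND/popcount passes.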

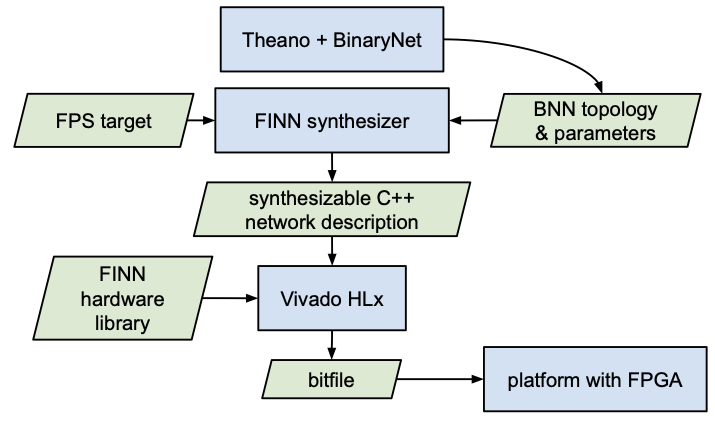

FINN: A Framework for Fast, Scalable Binarized Neural Network Inference

Yaman Umuroglu, Nicholas J. Fraser, Giulio Gambardella, Michaela Blott, Philip Leong, Magnus Jahre and Kees Vissers

Proceedings of the ACM/SIGDA International Symposium on Field-Programmable Gate Arrays (FPGA), 2017

DOI: https://doi.org/10.1145/3020078.3021744

Abstract: Research has shown that convolutional neural networks contain significant redundancy, and high classification accuracy can be obtained even when weights and activations are reduced from floating point to binary values. In this paper, we present FINN, a framework for building fast and flexible FPGA accelerators using a flexible heterogeneous streaming architecture. By utilizing a novel set of optimizations that enable efficient mapping of binarized neural networks to hardware, we implement fully connected, convolutional and pooling layers, with per-layer compute resources being tailored to user-provided throughput requirements. On a ZC706 embedded FPGA platform drawing less than 25 W total system power, we demonstrate up to 12.3 million image classifications per second with 0.31 μs latency on the MNIST dataset with 95.8% accuracy, and 21906 image classifications per second with 283 μs latency on the CIFAR-10 and SVHN datasets with 80.1% and 94.9% accuracy, respectively. To the best of our knowledge, ours are the fastest classification rates reported to date on these benchmarks.
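Two optimizations commonly used when mapping binarized networks to hardware, and of the kind the abstract refers to, are folding batch normalization followed by a sign activation into a single integer threshold comparison, and turning max-pooling over ±1 values into a Boolean OR. The sketch below shows that folding under our own assumptions ($\sigma > 0$, $\gamma \neq 0$, sign(0) = +1, and +1/-1 encoded as 1/0); the function names are hypothetical and not FINN's API.

```python
import math


def fold_bn_sign_to_threshold(gamma, beta, mu, sigma):
    """Return (tau, greater) such that gamma*(x - mu)/sigma + beta >= 0 holds exactly
    when (x >= tau if greater else x <= tau) for integer x. Assumes gamma != 0, sigma > 0."""
    t = mu - beta * sigma / gamma
    if gamma > 0:
        return math.ceil(t), True   # smallest integer satisfying x >= t
    return math.floor(t), False     # dividing by a negative gamma flips the inequality


def binarize(x, tau, greater):
    """Threshold an integer accumulator; 1 encodes +1 and 0 encodes -1."""
    return 1 if (x >= tau if greater else x <= tau) else 0


def binary_maxpool(bits):
    """Max over {-1,+1} values encoded as {0,1} is simply a Boolean OR."""
    return int(any(bits))
```

The threshold replaces a multiply, subtract, and divide per output with one integer comparison, which is why it maps cheaply onto FPGA logic.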

Computational Magnetic Metamaterials

Evolving Artificial Spin Ice for Robust Computation

Arthur Penty and Gunnar Tufte

International Journal of Unconventional Computing, 2023

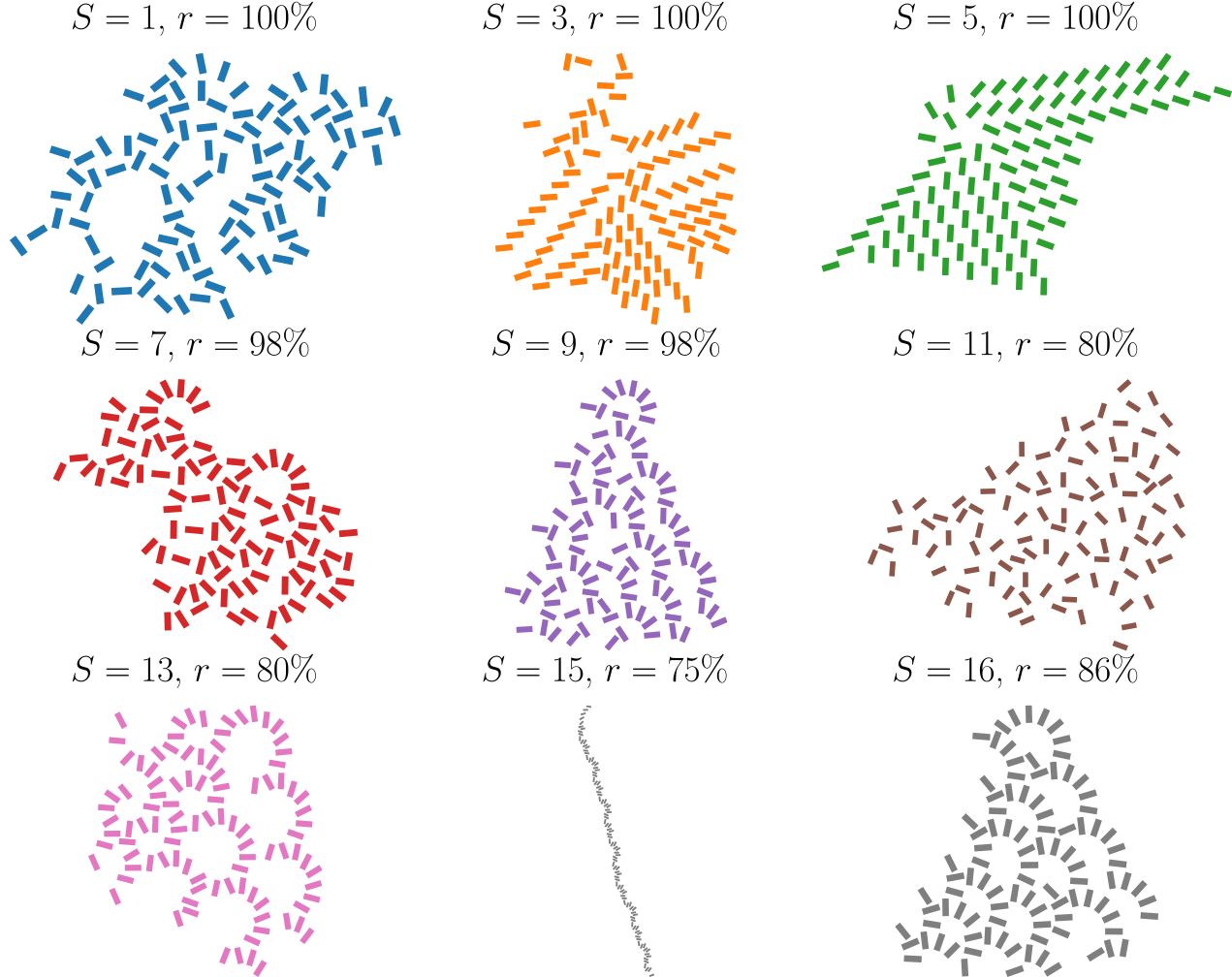

Abstract: Artificial spin ice is a magnetic metamaterial showing particular promise as a novel substrate for unconventional computing. While simulations are invaluable for investigating new computational substrates, results must be robust to the noise and disorder of the physical world for device realization. Here, we investigate the computational robustness of artificial spin ice towards fabrication disorder. Using an evolutionary search, we explore different geometries of artificial spin ice for robust computation. We show that by neglecting to consider disorder in the search, we obtain geometries that suffer greatly when disorder is introduced. We then demonstrate that by explicitly including disorder as part of the evolutionary search process, we are able to discover novel geometries that are robust against disorder. We also find that these geometries perform well on new instances of disorder, and when they fail, we see signs of graceful degradation.
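The difference between the two searches described above comes down to the fitness function: a disorder-aware search scores each candidate by its average performance over several freshly sampled disorder instances, rather than on an ideal, disorder-free simulation. The toy evolutionary loop below illustrates only that idea; the genome, the Gaussian disorder model, the truncation selection, and the `evaluate` callback are all hypothetical stand-ins for an actual artificial spin ice simulation.

```python
import random
import statistics
from typing import Callable, List

Genome = List[float]
Evaluate = Callable[[Genome, List[float]], float]  # hypothetical simulator callback


def robust_fitness(genome: Genome, evaluate: Evaluate, n_disorder: int,
                   sigma: float, rng: random.Random) -> float:
    """Mean score over several freshly sampled disorder instances."""
    scores = []
    for _ in range(n_disorder):
        disorder = [rng.gauss(0.0, sigma) for _ in genome]  # assumed Gaussian disorder
        scores.append(evaluate(genome, disorder))
    return statistics.mean(scores)


def evolve(evaluate: Evaluate, genome_len: int, generations: int, pop_size: int,
           n_disorder: int, sigma: float, seed: int = 0) -> Genome:
    """Toy truncation-selection search; sigma=0 gives the disorder-free baseline."""
    rng = random.Random(seed)
    pop = [[rng.uniform(-1.0, 1.0) for _ in range(genome_len)] for _ in range(pop_size)]

    def fitness(g: Genome) -> float:
        return robust_fitness(g, evaluate, n_disorder, sigma, rng)

    for _ in range(generations):
        parents = sorted(pop, key=fitness, reverse=True)[: max(1, pop_size // 2)]
        children = [[x + rng.gauss(0.0, 0.1) for x in rng.choice(parents)]
                    for _ in range(pop_size - len(parents))]
        pop = parents + children
    return max(pop, key=fitness)
```

Running the same loop with `sigma=0` and with `sigma > 0`, then re-scoring both winners on new disorder samples, mirrors the comparison in the abstract.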

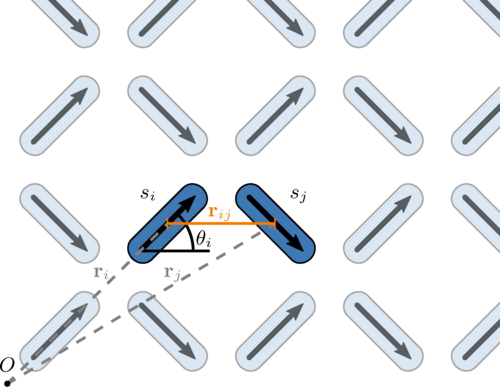

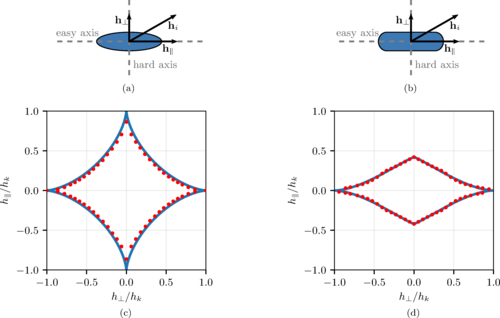

flatspin: A Large-Scale Artificial Spin Ice Simulator

Johannes H. Jensen, Anders Strømberg, Odd Rune Lykkebø, Arthur Penty, Jonathan Leliaert, Magnus Själander, Erik Folven, and Gunnar Tufte

Physical Review B, 2022

Open Access: https://journals.aps.org/prb/abstract/10.1103/PhysRevB.106.064408

Abstract: We present flatspin, a novel simulator for systems of interacting mesoscopic spins on a lattice, also known as artificial spin ice (ASI). A generalization of the Stoner-Wohlfarth model is introduced, and combined with a well-defined switching protocol to capture realistic ASI dynamics using a point-dipole approximation. Temperature is modelled as an effective thermal field, based on the Arrhenius-Néel equation. Through GPU acceleration, flatspin can simulate the dynamics of millions of magnets within practical time frames, enabling exploration of large-scale emergent phenomena at unprecedented speeds. We demonstrate flatspin's versatility through the reproduction of a diverse set of established experimental results from literature. In particular, the field-driven magnetization reversal of “pinwheel” ASI is reproduced, for the first time, in a dipole model. Finally, we use flatspin to explore aspects of “square” ASI by introducing dilution defects and measuring the effect on the vertex population.
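The point-dipole approximation mentioned above can be illustrated with the textbook field of a magnetic point dipole, summed over the other magnets in 2D. The coupling constant `alpha`, the optional distance `cutoff`, and the function name are assumptions for this sketch; it does not reproduce flatspin's API or its generalized Stoner-Wohlfarth switching criterion.

```python
import math


def dipolar_field(i, positions, moments, alpha=1.0, cutoff=math.inf):
    """Point-dipole field at magnet i from all other magnets within `cutoff`.

    positions, moments: lists of (x, y) tuples; alpha: coupling strength (assumed).
    Uses h = alpha * sum_j [3 r (m_j . r) / |r|^5 - m_j / |r|^3], r = r_i - r_j."""
    xi, yi = positions[i]
    hx = hy = 0.0
    for j, ((xj, yj), (mx, my)) in enumerate(zip(positions, moments)):
        if j == i:
            continue
        rx, ry = xi - xj, yi - yj          # vector from magnet j to magnet i
        d = math.hypot(rx, ry)
        if d > cutoff:
            continue                       # neighbors beyond the cutoff are ignored
        m_dot_r = mx * rx + my * ry
        hx += alpha * (3 * rx * m_dot_r / d**5 - mx / d**3)
        hy += alpha * (3 * ry * m_dot_r / d**5 - my / d**3)
    return hx, hy
```

For two magnets with parallel moments one unit apart, a head-to-tail pair gives a field of 2α along the moment, while a side-by-side pair gives -α, i.e., opposing it.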