MSCA ITN Early Language Development in the Digital Age (e-LADDA) - Individual Projects

Individual projects

WORK PACKAGE 1 (WP1)

Typical Language Learning in the Digital Age

WP1 brings together multidisciplinary approaches to language learning with an emphasis on the role of digital tools in shaping learning in childhood (from infancy to the early school years). The projects in WP1 are complementary thematically and exploit the full range of methods available in cognitive science, from robotics to eye tracking, EEG and fMRI, both as research methods and as training platforms for junior researchers. The goal of WP1 is to provide an account of language learning in a digital ecology in its social, cognitive and neural foundations, and to identify factors that exert a positive or negative influence on language outcomes. More concretely, we aim to extract and apply principles of word/concept learning in robot and touch-screen design, to model child speech with applications in technology, to establish maturational paths in multi-sensory skills and how they support language growth, and to establish the role of technology (e.g., mobile phone platforms, educational media) in both first and second language learning.

ESR1: Digitally automatized input in early word learning and vocabulary expansion

Supervisors: Prof Mani (UGOE), Prof Vulchanova (NTNU), Prof Cangelosi (UOM)

This project will examine the extent to which digitally automatized input and feedback enhance word learning success in toddlers, with regard to learning word-object associations and to subsequent learning of related words (or ‘vocabulary expansion’). We will combine eye-tracking and touch-screen methodologies. We will teach toddlers novel word-object associations (cf. Mani & Plunkett, 2008) and monitor learning and retention success. The overall aims are to gain a better understanding of the role of feedback in learning and retention in early childhood, to understand the relation between learning of individual words and vocabulary expansion, and to develop a paradigm that could be used to digitally tailor input and feedback in ways that foster learning.

ESR2: Predictors of language learning across sensory modalities

Supervisors: Prof Vulchanova (NTNU), Prof Mani (UGOE), Prof Baggio (NTNU), Dr Vulchanov (NTNU)

The aim of this project is to investigate a range of sensory predictors of first language (L1) acquisition in children. Language learning builds upon early auditory discrimination and categorization capacities in infants (Kuhl 2004). Little is known of the role of parallel processes in other modalities (e.g., vision, touch), which are less prominent for decoding speech signals, but are involved in providing concurrent evidence (e.g., on facial expressions) that is key to understanding communicative signals and, later, for interacting effectively with digital tools. We will carry out eye-tracking and EEG/ERP experiments using longitudinal designs to study the maturation of early sensory processing across modalities, and its (predictive) effects on vocabulary, grammar and digital interaction and learning capabilities.

ESR3: Child speech recognition and synthesis

Supervisors: Prof Vulchanova (NTNU), Prof Svendsen (NTNU), Prof Cangelosi (UOM)

This project aims to study, map and model characteristic features, at the acoustic and phonological levels, of speech produced by children. The project will apply state-of-the-art methods in language and speech technology, including machine learning, to large data sets of collected child speech. The data will be used for automatic recognition of child speech and text-to-speech synthesis of child-like voices with possible applications in robotics, child-robot interaction, and in the design of Augmentative and Alternative Communication (AAC) devices.

ESR4: The impact of mobile phone platforms as an aid to learning a second language

Supervisors: Prof Coventry (UEA), Prof Spencer (UEA), Prof Baggio (NTNU)

This project aims to explore how mobile phone technology can assist second language learning, and to compare the effectiveness of learning a second language (L2) via symbolic input (word-to-word relations) vs grounded relations (words and images) using mobile phone social network platforms. A further goal is to explore the neural correlates of second language learning across modes of learning. Monolingual (English-speaking) children will learn a second language supported by mobile phone communication using either text alone or text and images, or with no mobile phone support. The project will assess the effectiveness of mobile phone platforms for L2 learning. The two modes of mobile phone support will be compared to the no-support condition via assessment of post-training L2 vocabulary, grammar, comprehension and production in written and spoken language. fMRI data will be used to assess changes in brain areas associated with the L2 in children following different learning modes.

ESR5: Learning foreign languages through educational media

Supervisors: Prof Mani (UGOE), Prof Vulchanova (NTNU), Prof Cain (ULANC) and Prof Allen (UB)

Against the background of theories that posit an important role for social interaction in learning, this project will examine the extent to which sustained exposure to media (e.g., videos and apps) in a non-dominant second and/or foreign language promotes specific aspects of language learning, i.e., comprehension and production of phonetic/phonological and lexical features of language. We expect that this project will contribute to the current understanding of influences of multimedia on specific aspects of second language learning (Vulchanova et al., 2015), and will lead to new knowledge of the role of social interaction in theories of language learning, and to the development of appropriate media.

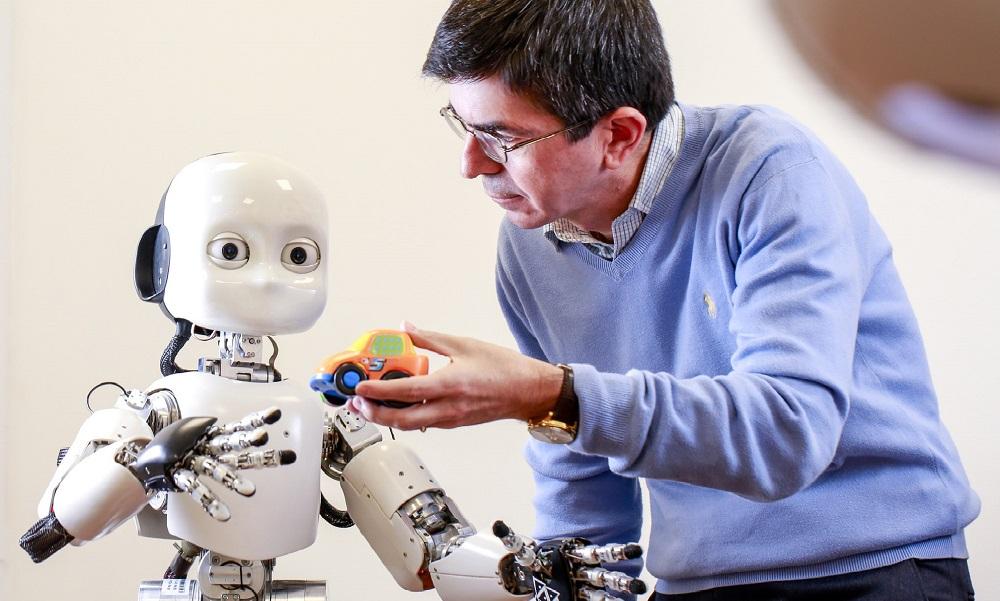

ESR6: Robot tutors for embodied language learning

Supervisors: Prof Cangelosi (UOM), Dr Gabriel Skantze (Furhat), Prof Spencer (UEA)

This project aims to develop a cognitive architecture for a robotic tutor to teach abstract concepts (e.g., number concepts and abstract words) to pre-school children using embodiment approaches. We will conduct human-robot interaction experiments to test the benefits of robot tutors in learning abstract linguistic concepts via robot-supported embodiment strategies. Recent work in developmental and educational psychology (e.g., Alibali & Nathan 2011, Goldin-Meadow & Alibali 2013) shows the benefits of embodiment strategies (e.g., gestures) in learning linguistic and numerical concepts. Computational models have been proposed in developmental robotics (Cangelosi et al. 2016) that use gesture to teach robots abstract number concepts. This project will extend this developmental robotics approach to design a cognitive control architecture for a robot tutor that can handle a variety of embodiment strategies (e.g., gestures, finger counting, object manipulation) in tutoring sessions between children and humanoid robots (Pepper, iCub, Furhat).

ESR7: Usage analysis of digital and analog learning materials for children

Supervisors: Prof Šķilters (University of Latvia), Dr Signe Bāliņa (DZC), Prof Vulchanova (NTNU)

This project will perform usage analysis of digital and analog learning materials for children (e.g., in schools). A further aim is to generate a comprehensive collection of digital interfaces that can be used as a testing basis for further empirical studies or as a perceptually and cognitively supportive, highly user-friendly implementation template for different learning content. The focus will be on analysing the impact of digital and analog media formats on content comprehension from a developmental perspective. This work will follow up on past and current research and development projects within the software company Datorzinību centrs (DZC) on the improvement of learning methods (including e-learning), accessibility and interoperability of multi-format digital resources. The role of Datorzinību centrs in this project will be (a) to provide programming work in generating interfaces and material (in both digital and analog format) that will be used in empirical analyses, and (b) to conduct, together with international partners (NTNU), empirical research with participants on how format impacts the development of different cognitive skills and abilities (e.g., reading, remembering, visuo-spatial cognition).

WORK PACKAGE 2 (WP2)

Digitally-Aided Intervention for Atypical Language Learners

WP2 aims to further explore the changing links between learning and digital tools in various contexts including education. We will extend the overall research theme in new directions, including the role of digital tools in designing and implementing interventions and assistive technology in atypical populations (e.g., autism, Down Syndrome, hearing impaired, low literacy groups). ESRs recruited for WP2 will be trained on a broad range of methods to be applied to research, diagnosis and intervention, data collection from vulnerable participants and children, as well as transferable skills between basic research, clinical research and education. Educational media are one bridge between WP1 and WP2, where they are investigated and harnessed in different contexts (language learning and literacy), with the aim to translate research from human participants to educational, clinical contexts and technological innovation.

ESR8: Language acquisition and robot-assisted early childhood intervention in autism

Supervisors: Prof Saldaña (USE), Prof Vulchanova (NTNU), Dr Skantze (Furhat), Dr Vulchanov (NTNU)

This project aims to implement existing behavioural early childhood interventions for children with autism spectrum disorder for use in a robot-assisted environment, and to examine the impact and added value of a robot-assisted early childhood intervention in autistic children. The University of Sevilla, Autismo Sevilla, ISOIN, and Furhat plan to develop a computer/robot-assisted environment for the application of existing early childhood interventions in autism. We will design and develop a randomized controlled trial (RCT) for the study of the robot-assisted environment, develop appropriate fidelity and outcome measures, and analyse data collected via video recordings, computer records and behavioural testing.

ESR9: Semantic word learning from storybooks in typical and atypical language

Supervisors: Prof Cain (ULANC), Prof Allen (UB), Prof Vulchanova (NTNU), Prof Saldaña (USE)

The main objective of the project is to study the interaction of child characteristics and medium on young children’s ability to learn new vocabulary through shared storybook reading. We will determine which aspects of digital and traditional print media best support semantic word learning, and we will manipulate these features to study how semantic word learning differs between children with Down Syndrome or autism and their typically developing peers. Features of digital and traditional books will be manipulated to determine the extent to which each format can be used to maximise communicative initiations, engagement and interaction, and to optimise learning.

ESR10: Using text-message to teach vocabulary to deaf people: A way to improve reading comprehension

Supervisors: Prof Rodríguez-Ortiz and Prof Saldaña (USE), Prof Coventry (UEA)

The aims of this project are to apply interventions that use mobile phone text messages to improve vocabulary in deaf children and adolescents, and to assess the impact of these interventions on vocabulary growth and reading comprehension. This involves testing the language development, reading skills and other cognitive abilities of deaf children and adolescents with standardized tests, following recruitment through schools, support groups and deaf associations. The vocabulary intervention is based on the Robust Vocabulary Instruction method (Beck, McKeown, & Kucan, 2002) and the use of images, sign language and synonyms accompanying the text message. We expect that the vocabulary intervention will influence reading comprehension, thus providing evidence of the role that vocabulary plays in reading comprehension in deaf people.

ESR11: Handwriting or swiping? Gesturing modalities and its impacts on early literacy skills

Supervisors: Prof Alves and Prof Leal (University of Porto), Prof Rodríguez-Ortiz (USE)

This project aims to understand the dynamic links between gestures, language and literacy by means of online measures of written production in typically and atypically developing children (e.g., autism, low literacy groups). Our goal is to understand which gesturing modality (handwriting vs. swiping) and technology (tablets vs. pen and paper) is more effective in fostering early attempts at literacy. At the same time, we will establish evidence-based interventions, which will be available for further testing and development in other projects within the training network. Edutainment games that collect production measures and adapt to individual users’ profiles will also be implemented.

ESR12: Mobile Application Development with Augmented Reality for Language Learning

Supervisors: Mrs Pasterfield (SpongeUK), Prof Cangelosi (UOM), Prof Saldaña (USE)

This project aims to develop a new smartphone/tablet app, based on the latest Augmented Reality (AR) developed by SpongeUK, to teach language concepts to autistic children in natural environments. Both pictograms and words will be included in the design. Experiments will be conducted to validate the app, the feasibility of its use to teach vocabulary in real-life settings, and its benefits on learning outcomes.

ESR13: Apps for learning: A software study analysis of mobile applications for language development in children

Supervisors: Dr Milan (UvA), Mrs Pasterfield (SpongeUK)

The project analyses the ecology and development of mobile apps for language acquisition in typical and atypical children from the perspective of developers, and will identify how notions and theories of language learning are translated into mobile applications for the market. Software ethnography, quantitative analysis and modelling, and interviews with software developers will be integrated, with the aim of producing a data-rich understanding of how mobile use mediates language learning.

ESR14: Child-personalized adaptive language teaching through interactive social robot

Supervisors: Dr Gabriel Skantze (Furhat), Prof Mani (UGOE), Prof Cangelosi (UOM)

The project aims to develop a novel theoretical framework for multi-modal child-robot interaction for language teaching in typically developing children and children with ASD, together with a language-teaching application for robots that can interact continuously with children and support speech recognition, engagement, perspective taking, tracking of a child’s learning progress, and face and gaze detection.

Horizontal Integrated Projects

The Integrated Projects are horizontal, ESR-led and peer-assisted projects cutting across Work Packages. e-LADDA puts significant emphasis on the fellows’ interdisciplinary training via bespoke opportunities for joint work by researchers from different disciplines on hands-on collaborative projects. Thus, in addition to the individual ESR projects, there are three projects, to be conducted collaboratively by the ESRs, which will involve all of the fellows working together to integrate various components and results from their own research into three key areas: gender differences and population-level variables; commercialisation of results through app development; and qualitative review of research. Each horizontal project will be led by a core group of two ESRs and two PIs. These are identified as Integrated Projects, as follows:

IP1: Gender and population-level variables in language learning and use of digital tools

In the first integrated project, we aim to harness data sets collected in other projects in WP1 and WP2, and explore possible differences between girls and boys in language learning outcomes and trajectories, in the underlying cognitive and neural mechanisms, and in the use of digital tools and their impact on language and literacy. This topic is re-attracting interest in the psycholinguistics and neuroscience communities. IP1 will require data exchange across teams in the ETN, and training in advanced data analysis skills, e.g., statistics on multiple data sets and meta-analysis. We aim to scale up analyses across data sets and ESRs by applying a ‘big data’ approach (harnessing expertise at NTNU, UEA, and UOM), i.e., constructing a large multi-dimensional set of all or most of the data collected and exploring it for possible hidden correlations between demographic and other population-level variables and dependent measures. Work on this project will be supported by the NTNU Big Data unit, which collaborates with the Coordinator research group.

IP2: App development and education

IP2 is related to the training and innovation component of the ETN, and aims to bring together the ETN’s technology projects, where integration across disciplines and methods is most likely to lead to the development of novel apps that may be applied in educational settings. An example of interaction will involve SpongeUK as an app developer, NTNU as a contributor of speech technology tools for interface design, UOM with key results on human-robot/tech interaction in learning, Furhat Robotics, and USE with expertise in education research. Over and above the individual projects, ESRs will work together in IP2 to produce a new app implementing different approaches to app design, e.g., gamification and augmented reality.

IP3: The pros and cons of digital technology for the human mind

In this integrated project, the ESRs will work collectively to produce a comprehensive literature review of positive and negative evidence for the impact of technology on cognition. As part of the review, the ESRs will produce a summary document as a working guide for educators and parents, which will help the ESRs develop skills in translational research and in communicating with broader audiences on this important societal topic.